HAProxy - a tale of two queues

In 2020 many services saw a drastic spike in their traffic. We saw sustained traffic 5-8x higher than our previous peaks. This led to our HAProxy instances struggling to keep up.

This article summarizes what we investigated and learned about queueing and network tuning for HAProxy servers, with a focus on throughput.

There are two interesting queues at play in any HAProxy server:

Per HAProxy frontend: the number of concurrent connections a frontend will hold is capped by its maxconn setting.

In the example configuration below, connections to the website frontend are capped at 20000.

When this limit is hit, new connections destined for website queue up in the socket queue maintained by the kernel.

    global
        maxconn 60000

    frontend website
        maxconn 20000
        bind :80
        default_backend web_servers

New connections: SYN backlog queue maintained by the OS (lower-level TCP stack).

Documentation

This is the queue that takes over when HAProxy's maxconn limits are saturated.

    tcp_max_syn_backlog - INTEGER
        Maximal number of remembered connection requests, which have not
        received an acknowledgment from connecting client.
        The minimal value is 128 for low memory machines, and it will
        increase in proportion to the memory of machine.
        If server suffers from overload, try increasing this number.

For example, we can use sysctl to check (and set) the current value:

    # Check
    sysctl net.ipv4.tcp_max_syn_backlog

    # Update (no spaces around "="; requires root)
    sysctl -w net.ipv4.tcp_max_syn_backlog=100000

Once HAProxy's queues fill up, it stops accepting new connections from the TCP socket, so the OS starts queueing connections in the SYN backlog queue.

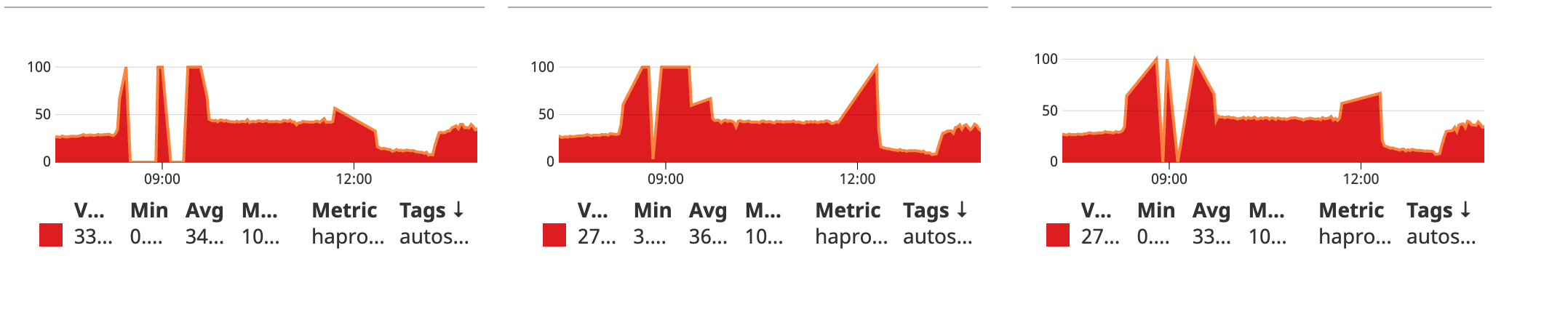

The graph shows HAProxy hitting 100% of its session limit, followed by a sharp drop in its capacity to handle connections.

Pseudo-code on how TCP works:

Every TCP connection starts with a 3-way handshake. A new socket on a server running HAProxy goes through the following steps, shown here in pseudo-code:

    socket.State := LISTEN
    for {
        switch clientPacket {
        case SYN: // client wants to connect
            socket.State = SYN_RECEIVED
            socket.Send(SYN_ACK)
            synQueue.append(socket)
        case ACK: // client ACKs our SYN_ACK
            socket.State = ESTABLISHED
            synQueue.remove(socket)
            acceptQueue.append(socket)
            HaproxyApplication.offerSocket(socket)
        }
    }

Once both the application-level queue (HAProxy) and the SYN queue fill up, the server starts dropping connections that are waiting for the client ACK at the socket queue level.
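The pseudo-code above can be turned into a small runnable toy model to see where drops occur. This is only a sketch under simplifying assumptions: the class `ListenSocket`, its method names, and the two counters are hypothetical, and the real kernel logic (retransmits, SYN cookies, timers) is considerably more involved.

```python
from collections import deque


class ListenSocket:
    """Toy model of a listening socket's two queues (not real kernel behavior)."""

    def __init__(self, syn_backlog, accept_backlog):
        self.syn_backlog = syn_backlog        # max half-open connections
        self.accept_backlog = accept_backlog  # max connections awaiting accept()
        self.syn_queue = set()                # clients in SYN_RECEIVED
        self.accept_queue = deque()           # clients in ESTABLISHED, not yet accepted
        self.syn_drops = 0                    # SYNs dropped because the SYN queue was full
        self.overflows = 0                    # ACKs ignored because the accept queue was full

    def on_syn(self, client):
        """Client wants to connect: remember it as half-open, or drop the SYN."""
        if len(self.syn_queue) >= self.syn_backlog:
            self.syn_drops += 1
            return False
        self.syn_queue.add(client)
        return True

    def on_ack(self, client):
        """Client ACKs our SYN_ACK: move it to the accept queue if there is room."""
        if client not in self.syn_queue:
            return False
        if len(self.accept_queue) >= self.accept_backlog:
            # Simplification: the ACK is ignored and the client stays half-open.
            self.overflows += 1
            return False
        self.syn_queue.remove(client)
        self.accept_queue.append(client)
        return True

    def accept(self):
        """The application (HAProxy in this article) takes the next connection."""
        return self.accept_queue.popleft() if self.accept_queue else None


sock = ListenSocket(syn_backlog=2, accept_backlog=1)
for client in ("a", "b", "c"):
    sock.on_syn(client)          # "c" is dropped: the SYN queue holds only 2
sock.on_ack("a")                 # "a" becomes ESTABLISHED
sock.on_ack("b")                 # accept queue is full, so "b" stays half-open
print(sock.syn_drops, sock.overflows)
```

As long as the application keeps calling `accept()`, both queues drain. Once it stops (for example because maxconn is reached), the accept queue fills first, then the SYN queue, and new SYNs start being dropped.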

See http://veithen.io/2014/01/01/how-tcp-backlog-works-in-linux.html for an explanation of how the queue works.

Verifying that the instance is under duress

Now that we know how the server handles connections and queues, we can verify our intuition about the state of the system by looking at metrics and inspecting the state of the socket connections.

Here are two handy commands to inspect the state of the TCP stack on the system:

    netstat -ant | awk '/ESTABLISHED|LISTEN|.*_WAIT|SYN_RECV/ {print $6}' | sort | uniq -c | sort -n

    # the output summarizes the counts of sockets in various states
      3 LISTEN
      9 SYN_RECV
     41 ESTABLISHED
    225 TIME_WAIT

Another good command to inspect the status of various TCP queues is:

    # display networking statistics
    netstat --statistics

    # output with dropped connections
    TcpExt:
        450 SYN cookies sent
        3627 SYN cookies received
        413 invalid SYN cookies received
        13923 TCP sockets finished time wait in fast timer
        6 packets rejected in established connections because of timestamp
        21543 delayed acks sent
        21869 times the listen queue of a socket overflowed
        21869 SYNs to LISTEN sockets dropped
    # more...

In the above example, the large counter for "SYNs to LISTEN sockets dropped" confirms that new connections not yet accepted by HAProxy (because maxconn had been reached) were overflowing the system's SYN backlog queue as well.
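To track these counters over time rather than eyeballing them, one option is to read them from /proc/net/netstat, where the kernel exposes the same TcpExt counters as alternating header and value lines. The sketch below is a minimal parser under that assumption. The function name `parse_proc_netstat` and the abbreviated `SAMPLE` text are illustrative; the sample reuses the values from the output above, and a real file contains many more fields.

```python
def parse_proc_netstat(text):
    """Parse /proc/net/netstat-style text into {"Prefix.Name": value}.

    The file consists of line pairs: a header line with counter names,
    followed by a line with the matching integer values.
    """
    counters = {}
    lines = text.strip().splitlines()
    for header, values in zip(lines[0::2], lines[1::2]):
        prefix, names = header.split(":", 1)
        _, numbers = values.split(":", 1)
        for name, number in zip(names.split(), numbers.split()):
            counters[f"{prefix.strip()}.{name}"] = int(number)
    return counters


def read_counters(path="/proc/net/netstat"):
    """Read and parse the live counters (Linux only)."""
    with open(path) as handle:
        return parse_proc_netstat(handle.read())


# Abbreviated sample; values copied from the netstat output in this article.
SAMPLE = """\
TcpExt: SyncookiesSent SyncookiesRecv SyncookiesFailed ListenOverflows ListenDrops
TcpExt: 450 3627 413 21869 21869
"""

counters = parse_proc_netstat(SAMPLE)
# ListenOverflows: "times the listen queue of a socket overflowed"
# ListenDrops:     "SYNs to LISTEN sockets dropped"
print(counters["TcpExt.ListenOverflows"], counters["TcpExt.ListenDrops"])
```

Because these counters are cumulative since boot, the interesting signal is how fast they grow between two reads, not their absolute value.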

What settings do we tweak?

Before making any changes, make sure you have proper telemetry set up so that you can measure the impact of the changes.

If HAProxy's CPU and memory usage are below high-watermark thresholds

In this case we can increase maxconn, but remember that this also increases memory pressure.

HAProxy uses 2 network sockets for every incoming connection.

One for the incoming client connection and one for the outgoing connection to the backend.

Since each connection uses about 16 KB of memory, earmark 32 KB per actual end-to-end connection.

The value of net.ipv4.tcp_max_syn_backlog can also be increased, but it is better to add backend servers or increase their throughput.

If increasing tcp_max_syn_backlog, be careful. From the listen(2) man page:

    If the backlog argument is greater than the value in /proc/sys/net/core/somaxconn, then it is silently truncated to that value; the default value in this file is 128. In kernels before 2.4.25, this limit was a hard coded value, SOMAXCONN, with the value 128.

Set leading alarms that keep an eye on when maxconn usage is trending close to its maximum.
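One way to build such a leading alarm is to compare the current connection count against the limit and alert before the limit is actually reached. The sketch below assumes the `Key: value` text returned by HAProxy's `show info` command on the stats socket, and uses its `CurrConns` and `Maxconn` fields, which are process-wide. The helper names, the sample numbers, and the 80% threshold are assumptions for illustration; per-frontend figures would have to come from the frontend statistics instead.

```python
def parse_show_info(text):
    """Parse "Key: value" lines into a dict of strings."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # skip lines without a colon
            info[key.strip()] = value.strip()
    return info


def maxconn_utilization(info):
    """Fraction of the process-wide connection limit currently in use."""
    return int(info["CurrConns"]) / int(info["Maxconn"])


def should_alert(info, threshold=0.8):
    """Leading alarm: fire when utilization reaches the (assumed) threshold."""
    return maxconn_utilization(info) >= threshold


# Made-up sample in the "show info" format, for illustration only.
SAMPLE_INFO = """\
Name: HAProxy
Maxconn: 60000
CurrConns: 51000
"""

info = parse_show_info(SAMPLE_INFO)
print(f"{maxconn_utilization(info):.0%}", should_alert(info))
```

Alerting on a fraction of the limit rather than on the limit itself leaves time to react before connections spill over into the kernel's queues.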

If HAProxy's memory usage is saturated

The server instance needs more resources.

Assume the server is holding on to 2k + 100k = 102k TCP connections in total (maxconn + SYN backlog).

At 32 KB per connection, the total memory needed to hold the connections is:

    32 KB * 102000 / 1024 / 1024 = 3.11 GB

Note that this is purely for holding connections open. HAProxy will likely have internal data structures associated with each connection that push actual memory usage higher.
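The same back-of-the-envelope estimate can be written as a small function, which makes it easy to try other queue sizes. It uses the article's figures of roughly 16 KB per socket and two sockets per end-to-end connection; the function and constant names are hypothetical, and like the calculation above it applies the same per-connection cost to entries in the SYN backlog.

```python
KB_PER_SOCKET = 16           # rough per-socket memory figure used in this article
SOCKETS_PER_CONNECTION = 2   # one client-side socket plus one backend-side socket


def connection_memory_gb(maxconn, syn_backlog):
    """Estimate the memory (in GB) needed just to hold connections open."""
    total_connections = maxconn + syn_backlog
    total_kb = total_connections * KB_PER_SOCKET * SOCKETS_PER_CONNECTION
    return total_kb / 1024 / 1024


# The scenario from the text: maxconn of 2k plus a SYN backlog of 100k.
print(round(connection_memory_gb(2_000, 100_000), 2))  # 3.11
```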

There are 3 ways to proceed here:

- Run the application on a larger instance

- Reduce the queue sizes and risk dropping connections

- Speed up/Scale up backend servers so that throughput increases and we can service a large volume of connections in a shorter amount of time

Future

In HAProxy 1.9 and newer, we can set priority on the incoming HTTP requests that are waiting in the queue.

example:

    backend web_servers
        balance roundrobin
        acl is_checkout path_beg /checkout/
        http-request set-priority-class int(1) if is_checkout
        http-request set-priority-class int(2) if !is_checkout
        timeout queue 30s
        server s1 backend1:80 maxconn 30
        server s2 backend2:80 maxconn 30
        server s3 backend3:80 maxconn 30

Lower numbers are given a higher priority. Setting priorities is a good way to ensure that more important requests, such as login or checkout, are serviced first in the queue.
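To illustrate the effect of priority classes on dequeue order, here is a toy model built on a heap. It is not HAProxy's implementation, only a sketch of the idea that a lower class number is served first and that requests within the same class keep their arrival order. The class `PriorityRequestQueue` and its methods are hypothetical, and the class assignment mirrors the `is_checkout` ACL from the configuration above.

```python
import heapq
import itertools


class PriorityRequestQueue:
    """Toy request queue: lower priority class first, FIFO within a class."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def enqueue(self, path):
        # Mirrors the ACL above: /checkout/ paths get class 1, everything else class 2.
        priority_class = 1 if path.startswith("/checkout/") else 2
        heapq.heappush(self._heap, (priority_class, next(self._arrival), path))

    def dequeue(self):
        """Return the path of the next request to be served."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


queue = PriorityRequestQueue()
for path in ("/home", "/checkout/cart", "/about", "/checkout/pay"):
    queue.enqueue(path)

served = [queue.dequeue() for _ in range(len(queue))]
print(served)  # ['/checkout/cart', '/checkout/pay', '/home', '/about']
```

Both checkout requests jump ahead of the earlier `/home` request, which is the behavior the priority classes are meant to provide when servers are saturated and requests wait in the queue.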